Making Engineering Performance Reviews Fair

As an inexperienced technical founder at Collage.com, I found that the first big growing pain was engineering performance, or the lack thereof. We had expanded at breakneck speed, and I had written breakneck code with little regard for technical debt or engineering process.

As others joined the team, they struggled to work within the code base and develop features at a reasonable pace. Their code frequently had bugs due to a lack of test automation, and it rarely met expectations due to a lack of planning and specification.

When it came time for performance reviews, their lack of output was undeniable, and I unfairly placed the blame on them.

While a very senior engineer may have been able to operate in this environment, the lack of performance was primarily a management problem, not an individual problem.

Many start-ups face similar challenges and all too often come to the same faulty conclusion I did: that engineers, not their managers, are fully responsible for their output.

This article focuses on understanding external impediments to engineering performance to ensure that engineers receive the support they need to reach their full potential.

Engineering Metrics

Fortunately, a lot has changed recently in the world of engineering metrics, which can now offer deeper insight into issues that interfere with productivity.

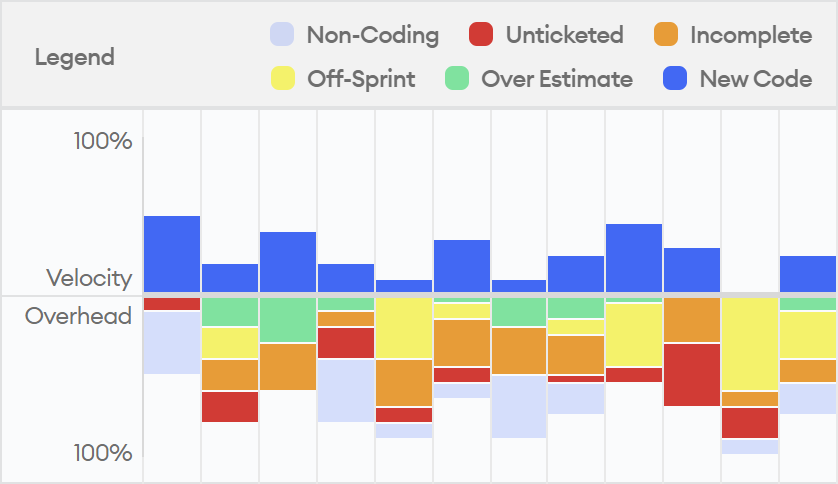

The first thing to look at when assessing engineering performance is how people spend their time. This chart from minware breaks engineering effort down into categories. Blue represents the time engineers have available for work that is easy for managers to see; the other categories capture time that is far less visible.

Working Under the Radar

Non-coding activities like meetings, training, and hiring can take significant time away from development work, and they often fall more heavily on some team members than on others.

Another common anti-pattern is a large amount of unticketed or unplanned work (off-sprint work, if you use Scrum). In the hectic world of high-growth engineering, people can end up spending much of their time responding to unstructured requests from across the organization and dealing with urgent bugs.

Sadly, this thankless work often goes unrecognized and leaves managers wondering why engineers were unable to ship the next feature.
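If your tooling can export logged time by category, a rough per-engineer version of this breakdown is easy to compute. The sketch below is a minimal illustration, not minware's method: it assumes hypothetical (engineer, category, hours) records and made-up category names such as `planned_dev` and `unticketed`, and it reports each engineer's share of time per category so that uneven meeting or interrupt loads stand out.

```python
from collections import defaultdict

# Hypothetical time record: (engineer, category, hours).
# Category names are illustrative assumptions, not any tool's real taxonomy.
TimeEntry = tuple[str, str, float]


def category_shares(entries: list[TimeEntry]) -> dict[str, dict[str, float]]:
    """Return each engineer's share (0.0 to 1.0) of logged hours per category."""
    hours: dict[str, dict[str, float]] = defaultdict(lambda: defaultdict(float))
    for engineer, category, h in entries:
        hours[engineer][category] += h

    shares: dict[str, dict[str, float]] = {}
    for engineer, by_cat in hours.items():
        total = sum(by_cat.values())
        if total > 0:  # skip engineers with no logged time to avoid dividing by zero
            shares[engineer] = {cat: h / total for cat, h in by_cat.items()}
    return shares


entries = [
    ("alice", "planned_dev", 24.0),
    ("alice", "meetings", 8.0),
    ("alice", "unticketed", 8.0),
    ("bob", "planned_dev", 32.0),
    ("bob", "meetings", 4.0),
    ("bob", "hiring", 4.0),
]

for engineer, by_cat in category_shares(entries).items():
    visible = by_cat.get("planned_dev", 0.0)
    print(f"{engineer}: {visible:.0%} planned development")
```

Here Alice spends 60% of her logged time on planned development versus Bob's 80%, a gap driven by meetings and unticketed requests rather than by how well either of them writes code.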

Process Problems

The next big impediment to engineering performance is a lack of process. If people don’t have clear expectations and proper guidance, it is impossible for them to be productive.

Enemy number one of predictable engineering is large tasks. It is very hard for everyone to agree on requirements and track progress if work is denominated in weeks or months rather than hours and days.

Here, it is helpful to first make sure that there are tickets for each task in a system like Jira, and that those tickets have some form of size estimate. Without this, it is unfair to draw conclusions about performance.

Once a basic level of process is in place, you can look at time spent beyond what is typical for a task of a given size or on tasks that did not complete by the end of a sprint, as shown in light green and orange in the chart above.

The critical thing to keep in mind here is that incomplete work and estimate overruns are almost always caused by things outside of the engineer’s control. Common culprits include incomplete requirements, lack of timely code review, and, as we will see next, technical debt.
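Once tickets carry size estimates, overrun and carryover time can be approximated from ticket data. The sketch below assumes hypothetical ticket records (key, size in points, hours spent, whether the ticket finished within the sprint, and a cause label that someone assigns by hand). It treats the median hours of completed tickets of the same size as "typical", which is one reasonable choice rather than a standard, and then totals the excess by cause so that time lost to incomplete requirements, slow review, or tech debt is not silently charged to the engineer.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import median


@dataclass
class Ticket:
    key: str                # hypothetical issue key, e.g. "APP-1"
    points: int             # size estimate (story points assumed)
    hours: float            # time actually spent on the ticket
    completed: bool         # finished by the end of the sprint?
    cause: str = "unknown"  # reason for overrun/carryover, labeled by a person


def typical_hours(tickets: list[Ticket]) -> dict[int, float]:
    """Median hours spent per size estimate, using completed tickets only."""
    by_size: dict[int, list[float]] = defaultdict(list)
    for t in tickets:
        if t.completed:
            by_size[t.points].append(t.hours)
    return {size: median(hs) for size, hs in by_size.items()}


def hidden_time_by_cause(
    tickets: list[Ticket],
) -> tuple[dict[str, float], dict[str, float]]:
    """Return (overrun hours, incomplete-ticket hours), each grouped by cause."""
    typical = typical_hours(tickets)
    overrun: dict[str, float] = defaultdict(float)
    incomplete: dict[str, float] = defaultdict(float)
    for t in tickets:
        if not t.completed:
            # All time on work that missed the sprint counts as incomplete.
            incomplete[t.cause] += t.hours
        elif t.hours > typical[t.points]:
            # Only the time beyond what is typical for this size counts as overrun.
            overrun[t.cause] += t.hours - typical[t.points]
    return dict(overrun), dict(incomplete)


tickets = [
    Ticket("APP-1", 2, 6.0, True),
    Ticket("APP-2", 2, 8.0, True),
    Ticket("APP-3", 2, 16.0, True, "incomplete requirements"),
    Ticket("APP-4", 3, 12.0, True),
    Ticket("APP-5", 3, 20.0, False, "technical debt"),
]
overrun, incomplete = hidden_time_by_cause(tickets)
print(overrun)     # {'incomplete requirements': 8.0}
print(incomplete)  # {'technical debt': 20.0}
```

With real data, you would want a reasonable number of completed tickets per size before trusting the median as a baseline.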

Technical Debt

Perhaps the greatest problem that interferes with on-time task completion is technical debt. Tech debt occurs when there are deficiencies in systems, tools, code quality, and test automation that make engineering harder than it should be.

In addition to assessing how much estimate overrun and incomplete task time is caused by technical debt, it helps to have senior engineers do a qualitative assessment (e.g., good, fair, or poor) of the most common problem areas:

- Test Automation - How easy is it to make changes without causing significant bugs in unrelated code? How much manual regression testing is required for each change?

- Development Environment - How much time do engineers spend just setting up code to run, and how frequently do problems arise in production that did not show up in the development environment?

- Continuous Integration/Continuous Deployment (CI/CD) - Do engineers have to spend significant time deploying code, or does it happen automatically? How long does it take for a build to complete and provide feedback?

- Frameworks and Type Checking - Does code utilize the best available frameworks and type checking systems to prevent common types of mistakes?

- Architectural Coupling - How easy is it to make and test changes in isolated parts of the system?

- Documentation - Is it easy to find information about how existing code is supposed to work (including comments and good naming)?

While this list is not comprehensive, it can quickly give you a read on whether developers are likely to struggle with productivity in the code base.

Another approach for assessing technical debt is to ask each developer what percentage of their time they would save if tech debt did not exist. This can be done as a one-off survey or on a regular basis to get more accurate numbers.

If the tech debt burden is high (over 20%), managers should strongly consider resolving the top issues before judging a lack of progress on customer-facing features. This is especially important because tech debt disproportionately affects more junior engineers, who are more likely to be from underrepresented groups and less likely to recognize and report the extent of the problem.
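The survey approach reduces to simple arithmetic. In this sketch, each engineer's answer is recorded as a fraction of their time (0.25 for 25%), the team burden is taken as the plain mean (an assumption; a median would also be defensible), and the 20% cutoff from this section is applied. Because junior engineers may underreport, it also lists individuals above the cutoff rather than relying on the average alone. The engineer names are hypothetical.

```python
from statistics import mean

# Cutoff from the text: a burden over 20% suggests fixing top issues first.
TECH_DEBT_THRESHOLD = 0.20


def summarize_survey(responses: dict[str, float]) -> dict:
    """Summarize answers to "what share of your time would you save without tech debt?".

    `responses` maps engineer name -> fraction of time in [0, 1].
    """
    if not responses:
        raise ValueError("no survey responses")
    for name, frac in responses.items():
        if not 0.0 <= frac <= 1.0:
            raise ValueError(f"{name}: expected a fraction between 0 and 1, got {frac}")

    team_avg = mean(responses.values())  # simple unweighted average (an assumption)
    above = sorted(name for name, frac in responses.items() if frac > TECH_DEBT_THRESHOLD)
    return {
        "team_average": team_avg,
        "over_threshold": team_avg > TECH_DEBT_THRESHOLD,
        "engineers_over_threshold": above,
    }


survey = {"alice": 0.10, "bob": 0.35, "carol": 0.25}
summary = summarize_survey(survey)
print(f"Team average: {summary['team_average']:.0%}")
print("Fix top tech debt first?", summary["over_threshold"])
print("Individually above 20%:", summary["engineers_over_threshold"])
```

Repeating the survey on a regular schedule and comparing results over time gives a better signal than a single snapshot.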

The Bottom Line

Fairly assessing engineering performance is difficult due to the complex nature of the job. Too often, performance problems are caused by a lack of proper support rather than individual issues.

In this article, we outlined several ways to identify roadblocks that impede engineering productivity.

By applying these practices, you can not only make engineering performance reviews fairer but also eliminate problems to unlock future growth.

If you’d like to learn more about fair performance reviews on your engineering team, we’d love to chat.