Process Maturity Scorecard

Other high-level reports like Project Cost Allocation and Sprint Best Practices help you visualize the overall flow of engineering effort into task delivery, but don’t directly answer the question: What should I do?

The process maturity scorecard report performs an extensive list of checks for best practices across the whole software development lifecycle to highlight areas that could benefit from improvement, giving you an actionable to-do list.

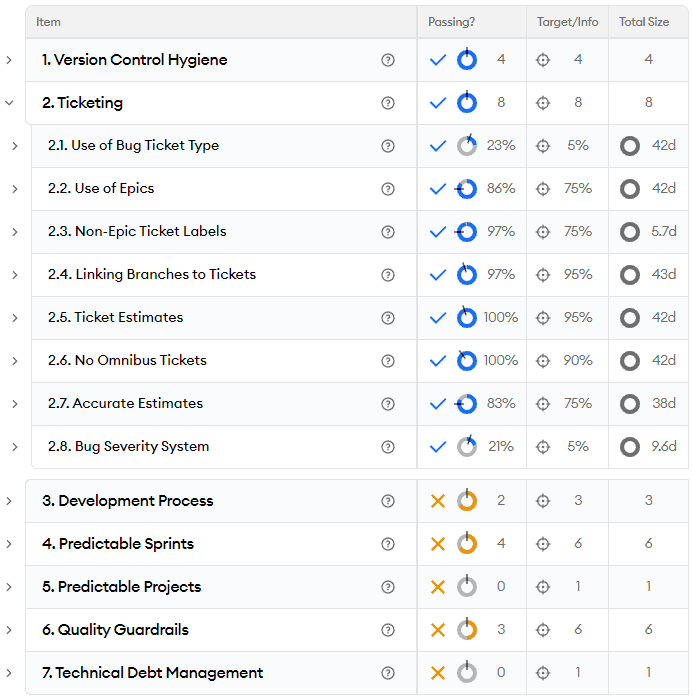

Here is a screen shot of the scorecard report with one of the categories expanded:

Most of the scorecard metrics are denominated in “Dev Days,” which is the work time spent following each best practice based on minware’s unique time model.

For example, the “Ticket Estimates” check looks at how much time is spent on tickets that have a story point or time estimate set. Rather than just counting tickets that meet the requirement, minware looks at the portion of time spent working on tickets that meet the requirement, which inherently prioritizes best practices by business impact and significantly reduces noise.
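To make the difference between counting tickets and weighting by time concrete, here is a minimal sketch. It is not minware's implementation: the `Ticket` class, its fields, and the sample numbers are all hypothetical and exist only to illustrate the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    # Hypothetical record; field names are assumptions for illustration.
    key: str
    dev_days: float      # work time attributed to the ticket
    has_estimate: bool   # story point or time estimate is set


def count_based_share(tickets):
    """Fraction of tickets that have an estimate, ignoring time spent."""
    if not tickets:
        return 0.0
    return sum(1 for t in tickets if t.has_estimate) / len(tickets)


def time_weighted_share(tickets):
    """Fraction of dev days spent on tickets that have an estimate."""
    total = sum(t.dev_days for t in tickets)
    if total == 0:
        return 0.0
    return sum(t.dev_days for t in tickets if t.has_estimate) / total


tickets = [
    Ticket("A-1", 8.0, True),    # one large, estimated ticket
    Ticket("A-2", 0.5, False),   # three small, unestimated tickets
    Ticket("A-3", 0.5, False),
    Ticket("A-4", 1.0, False),
]

print(f"By ticket count: {count_based_share(tickets):.0%}")   # 25%
print(f"By dev days:     {time_weighted_share(tickets):.0%}")  # 80%
```

In this made-up data, only one ticket in four has an estimate, but that ticket accounts for most of the work, so the time-weighted view shows that most effort already follows the practice.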

Each check will look at the portion of activity that follows the best practice and compare it to a target threshold. The thresholds are based on what is reasonable to expect on a healthy team, and have varying levels depending on the type of check. For example, linking branches to tickets is easy to do almost all the time, so its threshold is 95%, while the threshold for working on tickets in epics is 75% because using epics most of the time is important for visibility, but it isn’t a problem to skip some of the time for isolated tasks.
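The comparison against a threshold can be sketched as follows. Only the two threshold values (95% and 75%) come from the text above; the check names, the `evaluate` function, the use of "greater than or equal" as the pass rule, and the treatment of checks with no activity are assumptions made for illustration.

```python
# Thresholds quoted in the text; the dictionary keys are hypothetical names.
THRESHOLDS = {
    "branches_linked_to_tickets": 0.95,
    "tickets_in_epics": 0.75,
}


def evaluate(check, passing_dev_days, total_dev_days):
    """Return (share, passed) for one check.

    Assumes a check passes when its share meets or exceeds the threshold,
    and that a check with no activity has a share of 0.0.
    """
    share = passing_dev_days / total_dev_days if total_dev_days else 0.0
    return share, share >= THRESHOLDS[check]


# Hypothetical team with 20 dev days of activity in the interval.
for check, passing in [("branches_linked_to_tickets", 18.0),
                       ("tickets_in_epics", 16.0)]:
    share, passed = evaluate(check, passing, 20.0)
    print(f"{check}: {share:.0%} -> {'pass' if passed else 'fail'}")
```

With these sample numbers, 90% of time on linked branches fails the stricter 95% threshold, while 80% of time on tickets in epics passes the 75% threshold.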

Metric Categories

A detailed explanation of each scorecard check is beyond the scope of this documentation, but you can view the Full Scorecard Metric List for information about what each check looks at and why it is important. Here is a brief summary of what the scorecard report covers in each metric category:

- Version Control Hygiene - This category has basic checks to ensure that people are creating branches and pull requests for each task, which is important for overall visibility and report accuracy.

- Ticketing - This category has basic checks related to how tickets are filed and linked to code. This is primarily important for visibility into what people are working on and the nature of their tasks, but the estimate accuracy check in this category also looks at predictability from a ticket perspective.

- Development Process - This category looks at whether people are updating tickets as they work, but also at overall flow efficiency, which impacts predictability and throughput by measuring the impact of context switching.

- Predictable Sprints - This category checks for anti-patterns that show up in both the Project Cost Allocation and Sprint Best Practices reports, where people are working off-sprint or sprints have issues with rollover, incomplete tasks, work added after the start, or unmet commitments.

- Predictable Projects - This category focuses on predictability at the project level. All the earlier checks can pass and you can still have problems with project completion if scope and requirements are not well-defined. Here we look at best practices related to project delivery.

- Quality Guardrails - This category is very important because one way you can (and some teams actually do) optimize predictability and delivery is by shipping buggy code. Here we look at bug rates, churn, and bug load to identify issues with quality that may be impacting capacity for roadmap work or customer value.

- Technical Debt Management - Finally, it’s important to keep technical debt under control while improving in other areas. The impact of technical debt can be difficult to isolate when looking at overall productivity, and here we look at whether teams follow best practices for managing technical debt.

How to Read This Report

There are a few important considerations when assessing information in the scorecard report.

The first is that there are a lot of metrics here, and it doesn’t make sense to tackle all of them at once. The metrics are roughly ordered from low-level to high-level checks. You should focus on the lower-level checks first because they provide essential visibility needed to address higher-level checks.

For example, the checks for using branches, using branches for each task, linking branches to tickets, estimating tickets, and estimate accuracy form a natural progression where you need to pass each check in order. If only a small amount of activity is passing the earlier checks, then the later checks will only look at that small amount of activity and will be less meaningful (e.g., we accurately estimated the 10% of work that was ticketed!).
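The effect of this progression can be illustrated with some simple arithmetic. The sketch below assumes, purely for illustration, that each check in a chain only sees the activity that passed the check before it. The step names and percentages are hypothetical, and `coverage_through_chain` is not part of minware.

```python
def coverage_through_chain(conditional_shares):
    """Given (name, share) pairs where each share is measured only on the
    activity that passed the previous step, return the fraction of ALL
    activity that has passed through each step."""
    coverage = 1.0
    result = []
    for name, share in conditional_shares:
        coverage *= share
        result.append((name, coverage))
    return result


# Hypothetical numbers: very little work is linked to tickets, but the
# later checks look excellent on the small slice they can see.
chain = [
    ("linked to a ticket", 0.10),
    ("ticket has an estimate", 0.90),
    ("estimate was accurate", 1.00),
]

for name, covered in coverage_through_chain(chain):
    print(f"{name}: covers {round(covered, 2):.0%} of all activity")
```

Here the estimate accuracy step reports a perfect result, yet it describes less than a tenth of the total work, which is why fixing the earliest failing check comes first.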

Next, the way you look at the overall scores may vary depending on the size of your organization. If there are only a few teams, then org-level metrics will be informative. However, if you have 20 teams and a top-level metric is passing, it could be that each team is passing, or that most teams are doing really well and a few are failing badly.

We recommend expanding each check at least down to the board/team or grouping the overall report by project or board to independently assess each team.
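As a rough illustration of why an org-level pass can hide a struggling team, the sketch below aggregates made-up per-team numbers. The team names, dev-day figures, and helper functions are hypothetical; the 75% threshold is borrowed from the epics example earlier, and a "meets or exceeds" pass rule is assumed.

```python
THRESHOLD = 0.75  # taken from the epics example; pass rule is assumed

# Hypothetical (passing dev days, total dev days) per team.
teams = {
    "team-a": (95.0, 100.0),
    "team-b": (90.0, 100.0),
    "team-c": (92.0, 100.0),
    "team-d": (30.0, 100.0),
}


def org_share(teams):
    """Share of passing dev days across the whole organization."""
    passing = sum(p for p, _ in teams.values())
    total = sum(t for _, t in teams.values())
    return passing / total if total else 0.0


def failing_teams(teams, threshold):
    """Names of teams whose own share falls below the threshold."""
    return [name for name, (p, t) in teams.items()
            if t and p / t < threshold]


print(f"Org-level share: {org_share(teams):.1%}")
print(f"Org passes: {org_share(teams) >= THRESHOLD}")
print(f"Teams failing on their own: {failing_teams(teams, THRESHOLD)}")
```

In this example the organization as a whole clears the threshold even though one team is far below it, which only becomes visible once the check is broken down by team.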

Finally, you can run the scorecard report over any time interval, and it defaults to four weeks. For most checks, this is enough time to average out random variation, but certain checks like bug load might make sense to assess over a longer time interval to smooth out brief spikes in bugs right after a big release.

It can also be informative to run the scorecard on historical time intervals to see where you’ve made progress and which areas have lingering problems.

When to Use This Report

It is helpful to look at the scorecard report as part of strategic planning to identify top priorities for each team.

Furthermore, because the scorecard report provides direct, actionable insights, we recommend that teams review it at least monthly. This ensures that no new issues pop up unexpectedly, and it is also valuable because fixing earlier scorecard checks can change the result of later checks. It’s important to reassess priorities after each change rather than try to plan them all in advance at the beginning of a strategic planning interval like a quarter (which wouldn’t be very agile!).

Who Should Use This Report

Because this report is more focused on teams and direct actions, we recommend that first-level managers review it with senior managers to set priorities and track progress, but that senior managers focus primarily on higher-level outcomes in the Project Cost Allocation and Sprint Best Practices reports while giving teams autonomy over their specific improvements to meet those goals.

We also encourage individuals and teams to look at the scorecard on a regular basis (at least monthly) to see if the results match their expectations and they are making progress. To identify learning opportunities, it can also be really helpful for people to see how other teams or people are succeeding in areas where they struggle.

How Not to Use This Report

You can expand the report to see whether checks are passing at lower levels like people, sprints, epics, etc. We only recommend doing this as a way to isolate the root cause of failures at the team level and above, rather than to assess scorecard failures at a lower level when the team is passing. The reason is that issues may disproportionately affect lower-level entities due to random variation and less data, or because certain people bear the brunt of things like bugs. That being said, a few basic low-level checks like not committing to a main branch really are an individual responsibility and may make sense to assess in this way.

In a similar vein, we strongly advise against comparing people and teams to each other. Software development is not a competition, and it’s unfair to compare performance across different parts of the code base, business requirements, seniority levels, etc. The right way to use the scorecard is to identify opportunities for each team to improve relative to their former selves, not their peers.

Finally, we do not recommend that senior managers dictate the priority of certain scorecard items across teams, because the organization's end goal should be delivering high-quality software on time; the scorecard checks are only a means to that end, and their relative importance may vary across teams. The only exception here is certain low-level checks that serve other goals like security or financial tracking, such as not committing to main branches, doing code reviews, and linking work up to tickets and epics.

The Bottom Line

The scorecard report is where the rubber meets the road in minware. It provides guidance on exactly what teams should do to improve their productivity and is the most useful tool in setting priorities.

While the scorecard report covers a lot, here are some use cases where another report would be better suited to your needs:

- Project Cost Allocation - The scorecard report provides a useful snapshot of whether significant time is being lost in different areas, but the checks are essentially pass/fail. The Project Cost Allocation report helps you see the aggregate impact of all the scorecard improvements on productive time and track progress over longer periods.

- Sprint Best Practices - The scorecard report identifies specific problems with sprints for each team, but similar to the Project Cost Allocation report, Sprint Best Practices shows the total quantitative impact of all your improvements over time.

- Sprint Insights - The scorecard report allows you to drill down to some degree into individual items like tickets, but the Sprint Insights report provides a more granular view and detailed timeline that helps you understand the context surrounding each ticket and is better for assessing the root causes of specific failures during sprint retrospectives.

If you’re following the walkthrough series, we recommend reading about the Sprint Insights report next.